Responsible AI

Responsible AI helps organisations innovate at speed and with confidence, knowing that the right governance is in place to realise value, manage emerging risks and comply with upcoming regulation.

What EY can do for you

The transformational benefits of Artificial Intelligence (AI) and Generative AI (GenAI) are well established, but understanding and addressing the risks that these technologies bring is becoming an increasing global priority. Bias, a lack of transparency, explainability and accountability, and failure to comply with existing laws such as intellectual property and copyright law can undermine trust in AI and negatively impact both user adoption and realisation of the benefits.

Our Responsible AI services help organisations innovate safely. They provide confidence that AI and GenAI technologies are developed and managed ethically, transparently and sustainably, and that potential regulatory and reputational harms are identified and mitigated.

Our strategy spans the entire lifecycle of AI projects, including design, development, deployment and monitoring. It also includes training and awareness programmes that educate stakeholders about the nuances and implications of AI technologies. This education is a foundational step, crucial for fostering an informed and responsible approach towards AI development and deployment. EY teams can help you craft comprehensive AI strategies, exploring the art of the possible to deliver business goals responsibly.

GenAI brings unique challenges, and prioritising the most valuable use cases is key. Our GenAI governance framework helps you to avoid duplication and to ensure that use cases align with your organisational and technological readiness, business goals, organisational values and risk appetite.

Our approach to Responsible AI governance is robust but pragmatic. It builds on existing organisational governance, including third-party risk management, cybersecurity and data governance, and helps to ensure that technologies are not only innovative but also aligned with broader societal values and ethical principles.

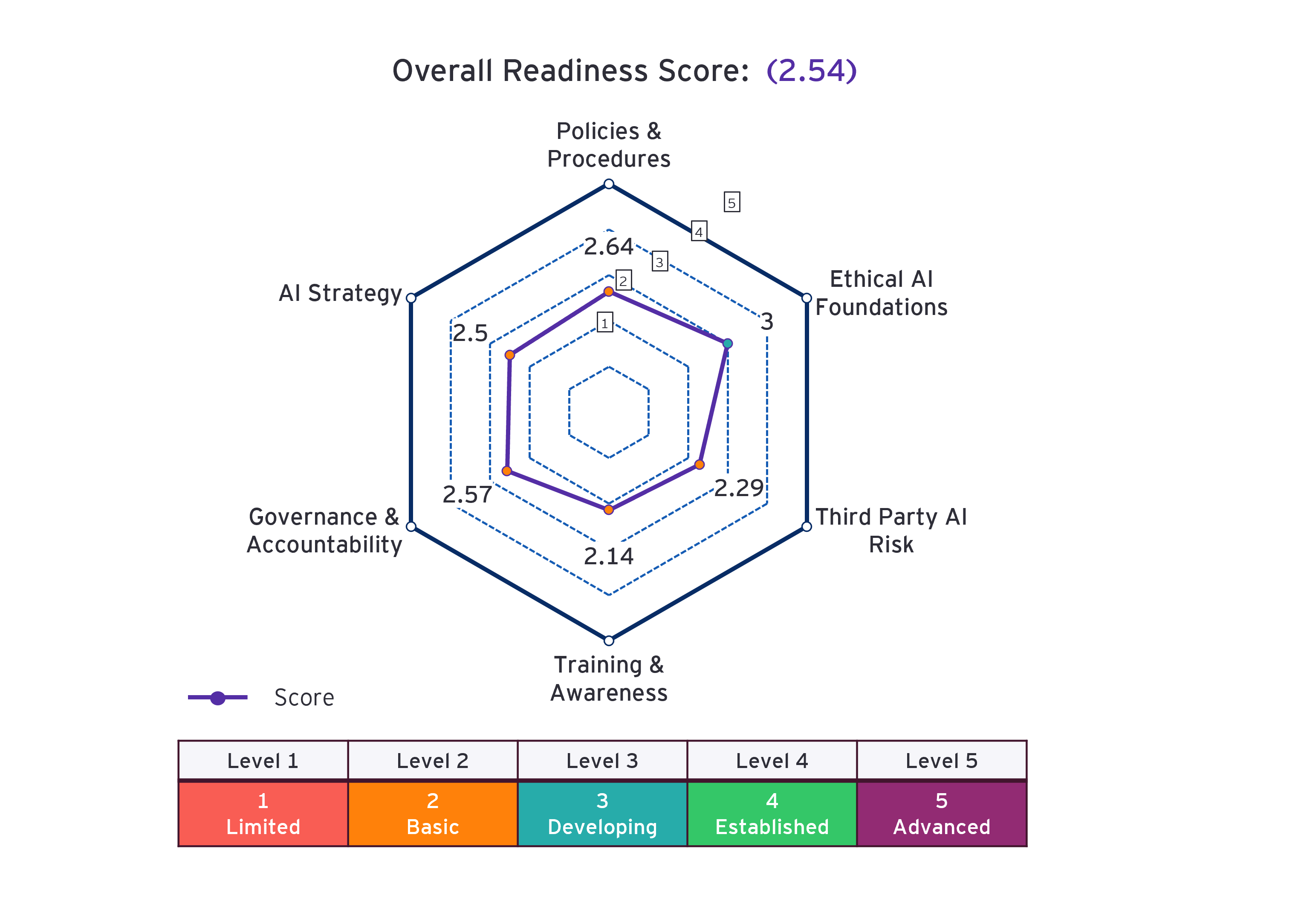

To get started, our Responsible AI Readiness Assessment provides a snapshot of your organisation’s readiness to manage AI risk and comply with forthcoming regulation. Our aim is to quickly identify gaps to address and provide a clear and pragmatic path forward.

The assessment measures readiness across six categories:

- AI strategy
- Policies and procedures
- Governance and accountability
- Training and awareness
- Ethical AI foundations
- Third-party risk

We produce a report outlining the maturity level in each area, with next steps and a roadmap linked to the EU AI Act requirements.

We work collaboratively with you and your business stakeholders to discuss findings and agree a practical pathway forward based on risk exposure and existing projects.

Example snapshot view of AI readiness for the EU AI Act:

How a global biopharma became a leader in ethical AI

EY teams used the global responsible AI framework to help a biopharma optimize AI governance, mitigate AI risks and protect stakeholders.

A detailed independent review of AI governance

EY teams collaborated with the biopharma to review its approach to AI ethics using the global responsible AI framework.

Driving responsible AI to reduce risk to stakeholders

EY teams helped the biopharma safeguard stakeholders, including the public, from AI ethical issues.

Contact us

Interested in the changes we have made here? Contact us to find out more.