One way to navigate that new and highly dynamic landscape is to embrace responsible and ethical AI standards that not only meet but exceed the new requirements being imposed by regulators.

Many regulated organisations already adopt this approach in other areas of their operations and are now applying it to their use of AI and GenAI systems. Organisations with fewer regulatory obligations may find it more challenging, but there are some key actions they can take to ensure the responsible use of AI.

Understand what AI means in your organisation

Organisations should recognise the role AI plays in enhancing business processes, decision-making, and customer experiences, and should support that understanding with clear guidance and real-world use cases. Understanding what AI means in the organisation should include an inventory of the AI tools currently in use and those the organisation intends to buy or build in future. It should also address how the organisation will source data ethically and how that data will be used. This will form the basis for what is monitored and reported on.
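As a rough illustration, an inventory of this kind could be kept as a simple structured record per tool. The sketch below is only one possible shape: the field names, the example tools, and the `by_status` helper are all hypothetical and are not taken from any EY framework.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    IN_USE = "in use"
    PLANNED = "planned"


@dataclass
class AITool:
    # All fields are illustrative assumptions, not a prescribed schema.
    name: str
    status: Status
    sourcing: str        # "buy" or "build"
    data_sources: list   # where the data comes from
    data_use: str        # how the data will be used


def by_status(inventory, status):
    """Return the names of the tools in the inventory with the given status."""
    return [tool.name for tool in inventory if tool.status is status]


# Hypothetical example entries.
inventory = [
    AITool("support-chatbot", Status.IN_USE, "buy",
           ["customer tickets"], "draft replies for agents"),
    AITool("credit-scoring-model", Status.PLANNED, "build",
           ["loan applications"], "rank applications for review"),
]

in_use = by_status(inventory, Status.IN_USE)
planned = by_status(inventory, Status.PLANNED)
```

Keeping the data sources and intended data use alongside each tool means the same record can feed both monitoring and reporting.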

Develop an AI strategy that delivers value

Achieving responsible AI begins with embedding a value-driven and sustainable AI strategy within the organisation’s culture, with humans at the centre. Staff at all levels must be educated continuously on the importance of responsible and ethical AI practices to ensure alignment with evolving AI standards.

Create a robust governance structure

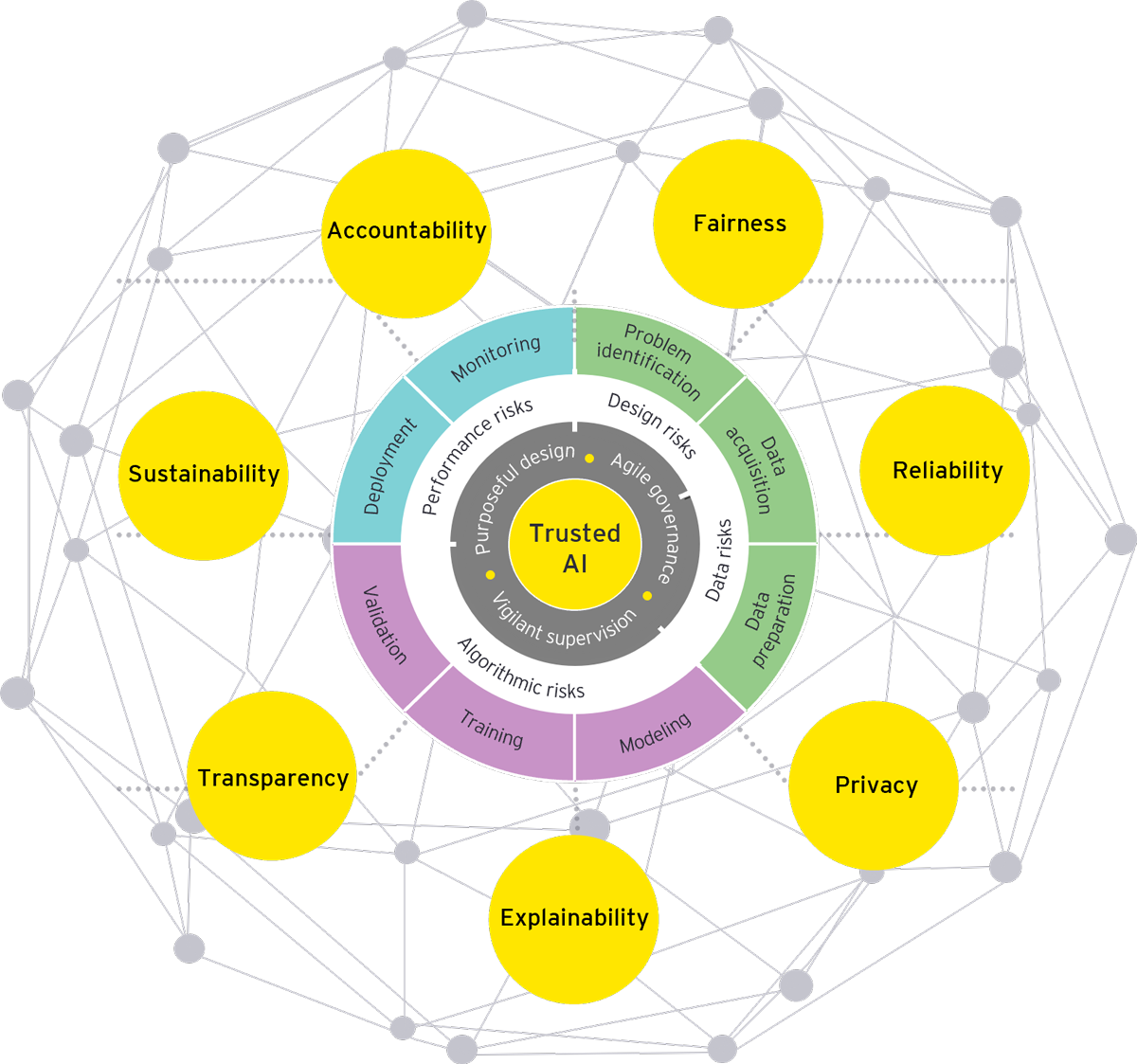

AI governance frameworks should embrace responsible AI principles including transparency, accountability, fairness, explainability, reliability and privacy.

EY Responsible AI Framework

Robust controls should be established and implemented, and processes should be put in place to manage risks and streamline reporting to regulators and other stakeholders. These processes should be underpinned by a robust monitoring framework that detects and mitigates risks as AI systems evolve over time. This will help maintain adherence to established risk thresholds and prevent unintended outcomes.
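To make the idea of monitoring against risk thresholds concrete, here is a minimal sketch. The metric names, the threshold values, and the `breaches` function are assumptions chosen for illustration; a real monitoring framework would define its own metrics and limits.

```python
def breaches(observed, thresholds):
    """Return the observed metrics that exceed their agreed threshold."""
    return {
        name: value
        for name, value in observed.items()
        if name in thresholds and value > thresholds[name]
    }


# Hypothetical thresholds agreed by the governance function.
thresholds = {"error_rate": 0.05, "drift_score": 0.20}

# Hypothetical metrics observed for one AI system in one reporting period.
observed = {"error_rate": 0.03, "drift_score": 0.27}

flagged = breaches(observed, thresholds)
```

Anything returned by a check of this kind would then be escalated through the reporting processes described above.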

Innovate within a compliance framework

Among the key challenges in deploying AI is the need to ensure regulatory compliance while also achieving a commercial return on investment. This requires robust quality assurance processes to ensure that AI outputs are reliable, alongside an overall governance framework that ensures the ethical use of the technology. However, these processes and frameworks should be constructed in ways that support safe innovation rather than stifle it. Furthermore, the increased use of GenAI will likely mean that established AI governance processes and frameworks need to be updated.

Summary

With the rapid growth in AI and GenAI use, organisations need to embrace responsible and ethical AI standards if they are to keep pace with both advances in the technology and the evolving regulatory landscape. Adherence to responsible AI practices and standards will ensure transparency, fairness, and human centricity. This approach will help organisations tap into AI’s enormous commercial benefits while complying with existing and new regulations.
