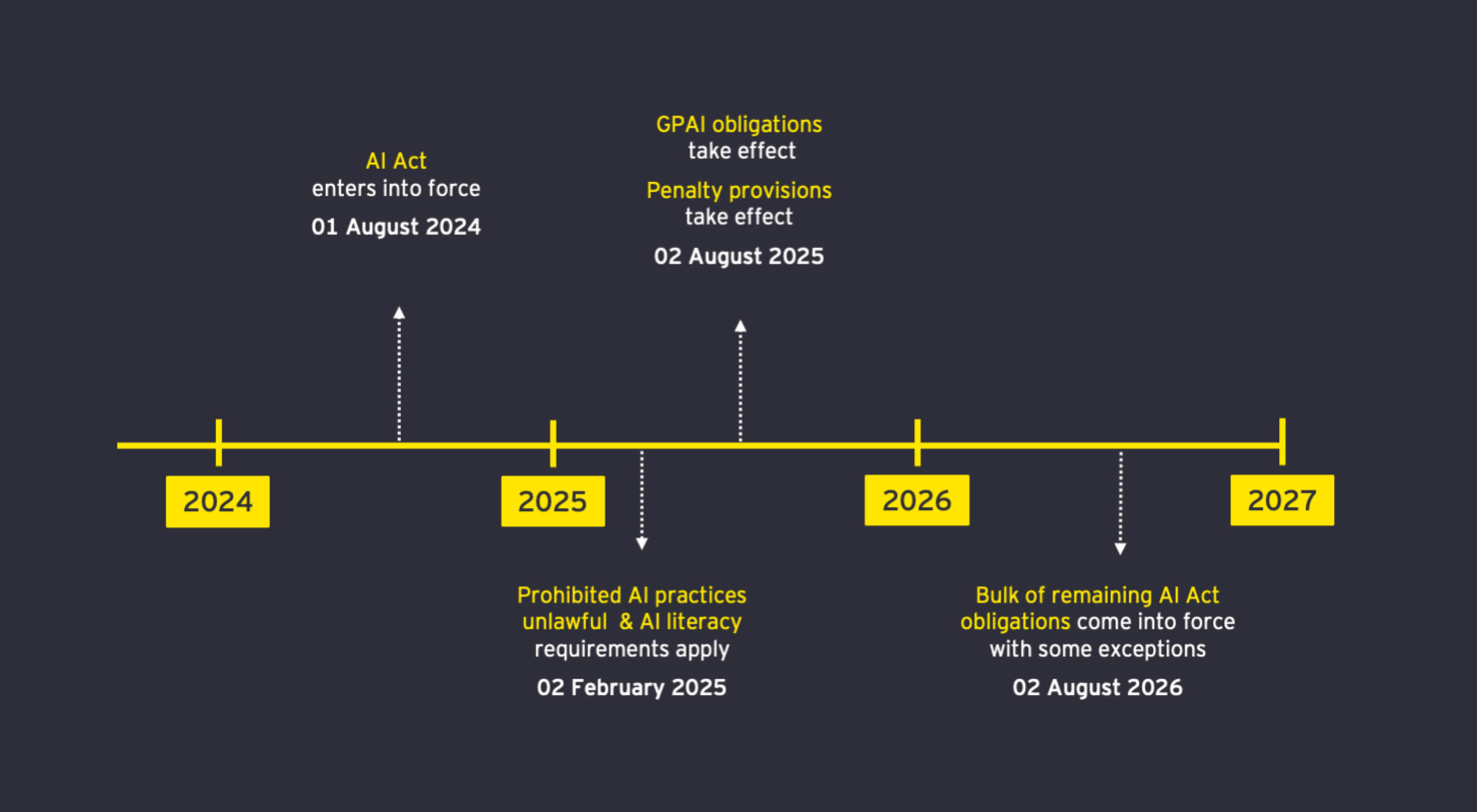

On 1 August 2024 the European Union's Artificial Intelligence Act (“AI Act”) entered into force. This started the clock on the staggered application of its obligations. The first obligations apply from 2 February 2025, only six months later, so organisations need to be ready.

The AI Act is designed to establish a harmonised legal framework for the regulation of certain types of AI systems and general-purpose AI (“GPAI”) models. Its publication marks a new era of digital governance in the EU, regulating the use of AI within the single market for the first time. As organisations across the EU begin to grapple with the implications of the AI Act, understanding its timeline and the obligations it imposes is crucial.

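The AI Act carries the potential for significant fines for non-compliance. The highest fines, for engaging in prohibited AI practices, can reach €35,000,000 or 7% of an undertaking's total worldwide annual turnover for the preceding financial year, whichever is higher.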
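Because the cap is "whichever is higher", the fixed €35,000,000 figure is the ceiling for smaller undertakings, while the 7% figure takes over once worldwide annual turnover exceeds €500,000,000. The short sketch below illustrates that arithmetic. It assumes the highest tier (prohibited AI practices) applies and that the offender is not an SME; for SMEs the Act applies the lower of the two figures instead, which this sketch ignores. The function name `max_fine_highest_tier` is hypothetical and used only for illustration.

```python
# Illustrative sketch only: upper limit of the highest fine tier under the AI Act,
# assuming a non-SME undertaking and the "whichever is higher" rule. Amounts in euro.
FIXED_CAP_EUR = 35_000_000
TURNOVER_PERCENT = 7


def max_fine_highest_tier(worldwide_annual_turnover_eur: int) -> float:
    """Return the higher of EUR 35m and 7% of the preceding year's worldwide turnover."""
    turnover_based_cap = worldwide_annual_turnover_eur * TURNOVER_PERCENT / 100
    return max(FIXED_CAP_EUR, turnover_based_cap)


# Below EUR 500m turnover the fixed amount is higher; above it, the 7% figure is.
for turnover in (100_000_000, 1_000_000_000):
    print(f"turnover EUR {turnover:,} -> cap EUR {max_fine_highest_tier(turnover):,.0f}")
```

For a turnover of €100,000,000 the cap stays at €35,000,000, whereas for €1,000,000,000 it rises to €70,000,000.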

The AI Act places obligations on those who work with AI systems and GPAI models. Both providers and deployers of AI systems have extensive obligations; however, the bulk of the obligations lies with providers. A provider is generally the developer of the AI system, while a deployer is generally the organisation that uses an AI system under its own authority. The AI Act also imposes obligations on other operators, including importers and distributors of AI systems. The definition of distributor will capture those who resell, in the EU, products that contain AI systems.

The AI Act categorises the various uses of AI systems into different levels of risk. It specifically defines the categories of prohibited, high-risk and GPAI. While not explicitly set out in the Act, there are additional obligations which we have categorised as limited risk and low risk. AI systems in the limited-risk category are subject to certain transparency obligations, while those in the low-risk category can choose to comply with voluntary codes of conduct. The highest level of risk is deemed unacceptable, and AI systems posing it are prohibited under the AI Act.